As generative AI frees more senior software developers from routine coding tasks, many other aspects of this automation need consideration.

When AI is trained to generate software code, that code can inherit any security vulnerabilities contained in the training data.

Therefore, while generative AI (GenAI) is speeding up software development, it is also changing how code quality and compliance checks must be tightened to avoid AI bias and other unwanted results.
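To make the idea of "inherited" vulnerabilities concrete, here is a minimal illustrative sketch in Python. It assumes, purely for illustration, that a code assistant has reproduced a common pattern from public code: building an SQL query by string formatting. The table, function names and payload are hypothetical. The unsafe version lets crafted input rewrite the query, while the parameterized version treats the same input as plain data.

```python
import sqlite3

def make_db() -> sqlite3.Connection:
    # Hypothetical in-memory database with one table, for illustration only.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, secret TEXT)")
    conn.executemany(
        "INSERT INTO users VALUES (?, ?)",
        [("alice", "a-secret"), ("bob", "b-secret")],
    )
    return conn

def find_user_unsafe(conn: sqlite3.Connection, name: str):
    # Vulnerable: user input is spliced directly into the SQL text.
    # This pattern is common in public code, so a model may reproduce it.
    query = f"SELECT name, secret FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()

def find_user_safe(conn: sqlite3.Connection, name: str):
    # Parameterized query: the driver binds `name` as a value, never as SQL.
    return conn.execute(
        "SELECT name, secret FROM users WHERE name = ?", (name,)
    ).fetchall()

conn = make_db()
payload = "x' OR '1'='1"
print(len(find_user_unsafe(conn, payload)))  # 2: every row leaks
print(len(find_user_safe(conn, payload)))    # 0: no user has that literal name
```

Both functions look equally plausible at a glance, which is exactly why generated code needs the scrutiny discussed below.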

According to Kelvin Lim, Senior Director of Security Engineering, Software Integrity Group, Synopsys, the following points are relevant to teams delving into GenAI code development.

Three key benefits

Increased productivity: Leveraging GenAI on routine coding tasks saves time and resources.

Streamlining of the development process: GenAI solutions can generate code based on the user’s prompt. This makes it easier for developers of different skill levels to “write” code.

Increased accessibility to citizen programmers: The technology allows non-professional programmers to develop applications that meet the needs of their own field of expertise, without having to rely on trained programmers who would need to spend considerable time understanding the complexities of the project.

Vulnerabilities that have surfaced

If the data used to train the large language model (LLM) is biased towards certain demographics, ideologies, or cultural norms, the AI may produce code that reflects and perpetuates these biases, which can lead to discriminatory outcomes. Other types of latent risks and vulnerabilities include:

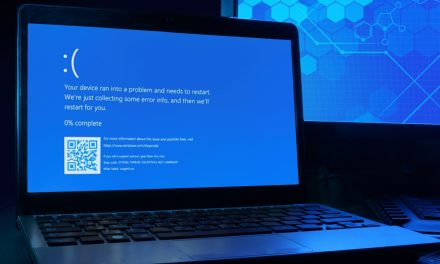

Unintended consequences: GenAI may produce code that behaves unpredictably in certain situations, or interacts unexpectedly with other components of a software system. These unintended consequences could result in system failures, performance degradation, or other undesirable outcomes.

Lack of accountability: It may be challenging to assign responsibility when issues arise from AI-generated code. Traditional methods of accountability, such as code reviews and audits, may not be sufficient for ensuring the reliability and safety of AI-generated software.

Ethical dilemmas: AI-generated code may raise ethical dilemmas regarding the ownership of intellectual property, the impact on employment in the software development industry, and the societal implications of automating certain aspects of software development.

Three inherent security vulnerabilities

Code generated by GenAI may appear to work at first, but it can contain security vulnerabilities.

Challenges in quality assurance and testing: Developers may not make the effort, or may simply be unable, to manually check and fully understand the hundreds or thousands of lines of generated code, on top of the code they add by hand.

Data poisoning: GenAI models learn from their training data. Malicious actors can poison that data by injecting malicious code samples, which can lead the AI to generate code riddled with security vulnerabilities.

Over-reliance on AI: Over time, developers may lack practice and their coding skills may deteriorate in subtle ways that impede their ability to identify security issues and other problems in the code.
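One partial defence against data poisoning is to screen candidate code samples before they enter a training or fine-tuning corpus. The Python sketch below is a crude, hypothetical illustration of that idea: the deny-list in `RISKY_PATTERNS`, the `Sample` type and the `screen` function are all assumptions made for this example, not any vendor's method. Regexes like these are easy to evade, so a real pipeline would rely on proper static analysis, provenance checks and human curation.

```python
import re
from dataclasses import dataclass

# Hypothetical deny-list of patterns that should not appear in "good"
# training examples. Illustrative only; easy to bypass in practice.
RISKY_PATTERNS = {
    "eval call": re.compile(r"\beval\s*\("),
    "shell=True": re.compile(r"shell\s*=\s*True"),
    "hard-coded password": re.compile(
        r"password\s*=\s*['\"][^'\"]+['\"]", re.IGNORECASE
    ),
    "TLS verification disabled": re.compile(r"verify\s*=\s*False"),
}

@dataclass
class Sample:
    source: str  # where the sample came from (repository, contributor, URL)
    code: str

def screen(samples):
    """Split samples into (accepted, quarantined) using RISKY_PATTERNS."""
    accepted, quarantined = [], []
    for s in samples:
        hits = [name for name, pat in RISKY_PATTERNS.items() if pat.search(s.code)]
        if hits:
            quarantined.append((s, hits))  # held back for human review
        else:
            accepted.append(s)
    return accepted, quarantined

samples = [
    Sample("repo-a", "def add(a, b):\n    return a + b\n"),
    Sample("repo-b", "requests.get(url, verify=False)\n"),
    Sample("repo-c", "subprocess.run(cmd, shell=True)\n"),
]
accepted, quarantined = screen(samples)
print([s.source for s in accepted])  # ['repo-a']
for s, hits in quarantined:
    print(s.source, hits)
```

Quarantining rather than silently dropping samples keeps a record of where suspicious code came from, which also helps with the accountability concern raised earlier.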

Four ways to address the risks of GenAI coding

Leverage application security tools to scan all code for security vulnerabilities.

Understand the limitations of GenAI code generation and establish stringent processes for reviewing the code before it is approved.

Maintain and upgrade the skills of developers by striking a balance between when they need to manually code certain portions and when they can relegate routine work to AI automation.

Generative AI can be used on routine and repetitive coding tasks while human coders can be deployed to code bespoke and intricate segments of the application.
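The first two recommendations, scanning all code and reviewing it before approval, can be combined into an automated gate. The Python sketch below is a toy illustration under stated assumptions: `scan` uses the standard `ast` module to flag only `eval`/`exec` calls and `shell=True` arguments, and the `Change` type and `can_merge` policy (AI-generated changes also need at least one human approval) are hypothetical. Commercial application security tools check far more than this.

```python
import ast
from dataclasses import dataclass, field

DANGEROUS_CALLS = {"eval", "exec"}

def scan(source: str) -> list[str]:
    """Return findings for a Python source string (toy static analysis)."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if not isinstance(node, ast.Call):
            continue
        # Direct calls such as eval(...) or exec(...).
        if isinstance(node.func, ast.Name) and node.func.id in DANGEROUS_CALLS:
            findings.append(f"line {node.lineno}: call to {node.func.id}()")
        # Any call passing shell=True, e.g. subprocess.run(cmd, shell=True).
        for kw in node.keywords:
            if (kw.arg == "shell" and isinstance(kw.value, ast.Constant)
                    and kw.value.value is True):
                findings.append(f"line {node.lineno}: shell=True")
    return findings

@dataclass
class Change:
    source: str
    ai_generated: bool
    human_approvals: list[str] = field(default_factory=list)

def can_merge(change: Change) -> tuple[bool, list[str]]:
    """Hypothetical gate: scan every change; AI-generated ones also need a human reviewer."""
    reasons = scan(change.source)
    if change.ai_generated and not change.human_approvals:
        reasons.append("AI-generated change has no human approval")
    return (not reasons, reasons)

snippet = "import subprocess\nsubprocess.run(user_cmd, shell=True)\nresult = eval(expr)\n"
ok, reasons = can_merge(Change(snippet, ai_generated=True))
print(ok)  # False
for r in reasons:
    print(r)
```

The design choice here is that scanning applies to all code, human-written or not, while the human-approval rule targets only AI-generated changes, mirroring the split between automated tooling and stringent review described above.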

Given the rapid pace of innovation in AI, what does Lim anticipate in the field of software development? “The use of GenAI to code will continue to increase. There will be a shift in programming trends where programmers will move towards designing the overall architecture of the application, with GenAI performing most of the coding tasks. Application security vendors will continue to develop and enhance solutions to help developers secure their AI-generated code,” he said.