Experts on both sides of the fence weigh the flood of uses and abuses that the technology unleashes

OpenAI’s chatbot ChatGPT is widely regarded as the breakthrough moment in generative AI development.

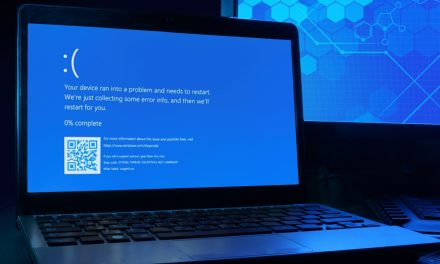

While the rest of the world is reveling in the newfound power of using generative AI for tasks such as code writing, copywriting, content creation, customer support and written reports, malicious users are also exploiting its capabilities to improve malware attacks, social engineering and phishing campaigns.

According to one observer, Ashish Chaturvedi, Practice Leader, HFS Research: “We do not believe that ChatGPT directly threatens cybersecurity. However, because it is a language model, it provides an easier way to generate malicious code and phishing emails that can aid cyber attackers.”

Chaturvedi went on to say that these anomalies would eventually be fixed.

Two sides to the risk profiles

On the other side of the risk analysis coin, Mrinal Rai, Principal Analyst, ISG, opined: “As far as threats in ChatGPT are concerned, our research indicates that threat actors may be able to utilize this technology to generate potentially harmful code. Any new technology that creates opportunities also has its own set of threats,” alluding to concerns about plagiarism and intellectual property theft.

Researchers from Check Point have discovered, for instance, that members of some cybercrime forums are using ChatGPT to produce malware. Participants in one hacking forum have started a thread in which they share screenshots of Python code generated by the language model. In a similar vein, many cybersecurity experts believe that ChatGPT can be used to create credible content for phishing attacks and social engineering tactics.

One cybersecurity company, WithSecure, has noted in a report that hackers are using ChatGPT to make phishing and spear phishing emails more convincing. Cybersecurity experts usually tell users to look for spelling, grammar, and other errors to distinguish a real email from a phishing scam. However, ChatGPT-generated content can contain far fewer of these telltale errors, increasing the likelihood of people falling for the scam.

More AI power, more evasive cyber threats

The potential explosion of false information generated by ChatGPT has sparked concerns around the world. Cybercriminals can use misinformation of this kind to mislead people on a wide range of topics, leaving them vulnerable to various threats.

Another danger brought on by the easy creation of such false information is ‘trolling’. Trolls have already inflamed ongoing ideological conflicts over the Russia-Ukraine war, Brexit, and various elections and political campaigns. This kind of activity is likely to get worse in the coming years because of ChatGPT, some experts argue.

The technology known as “deepfakes” has already raised concerns worldwide. While fake audio and video materials are already convincing enough on their own, with generative AI such as ChatGPT thrown in, the believability of the content will increase manifold and catch more people off guard.

Proceed with extreme caution?

Given the unquestionable usefulness of generative AI, the world is likely to embrace the technology with open arms and offload tasks that a machine could take over.

However, as an evidently double-edged sword, such a technology could also entice ordinary people to abuse the newfound communication power.

Even though generative AI as a technology will develop over time, it is necessary to manage risks during the transition period. As the worldwide race to outdo ChatGPT intensifies, leaders will need to be held accountable for training and controlling AI and ML algorithms with balanced and accurate information, for producing bias-free and culturally sensitive responses, and for guarding against requests to assist questionable agendas and even attempts to create biased self-learning.

Only when placed under constant vigilance and a strong cybersecurity and ethical framework will advances in generative AI deliver maximum benefits with minimal repercussions.