Hackers or car thieves may even exploit your car's new-fangled generative AI chatbot to help you part ways with your ride…

At this year’s CES, one car manufacturer showcased the integration of their ChatGPT-based chatbot into their product lineup.

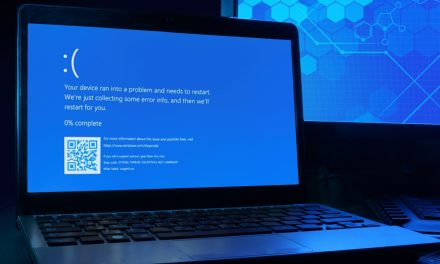

However, just as the early days of ChatGPT saw hackers abuse the technology to create and improve malware and hacking tools, some cybersecurity experts are concerned that using generative AI in automobile operation may bring teething pains and cyber trouble.

According to Dennis Kengo Oka, Principal Automotive Security Strategist at Synopsys Software Integrity Group: “A digital assistant in your car could be abused to potentially gain certain harmful information (for example, how to clone keys or run unauthorized commands) that could be harvested by attackers for various bad intentions. While deploying a digital assistant in your car would provide many benefits and definitely improve the user experience, it is also important to consider the risks.” Oka asserted that car manufacturers implementing generative AI in their products must limit the scope of the training data used and restrict the content of responses in order to prevent abuse or actions with malicious intent.

Oka continued: “Moreover, OWASP is a good source of information for automotive manufacturers to consider when developing their AI systems. It is important to be aware of the different types of cybersecurity concerns or attacks in order to develop proper security countermeasures.” He cited five examples of generative AI cyber threats as they apply to car operations:

- Hackers can use a ‘Prompt Injection attack’, where they feed the AI system certain data to make it behave in ways it was not intended to.

- ‘Sensitive Information Disclosure’ can occur if an attacker is able to extract specific IP-related data or privacy-related data.

- The AI model itself can also be targeted through a ‘Training Data Poisoning attack’ where it becomes tainted due to being trained on incorrect data.

- There is also a concern of ‘AI Model Theft’, where attackers can reverse-engineer or analyze the contents of the model.

- Additionally, some studies have shown that AI systems generate appropriate content 80% of the time, but the remaining 20% of the time the content is simply invalid: so-called ‘AI hallucinations’.
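
As a rough illustration of the kind of restriction on response content that Oka describes, the sketch below screens a draft reply before an in-car assistant speaks it. It is only a minimal example under stated assumptions: the pattern list, the refusal message and the `filter_response` function are hypothetical, not any manufacturer's implementation, and a simple deny-list like this would not by itself stop a determined prompt injection attack.

```python
import re

# Hypothetical deny-list: topics the assistant should never explain.
# The two patterns mirror the examples in Oka's quote (key cloning,
# unauthorized commands); a real system would need far broader coverage.
BLOCKED_PATTERNS = [
    re.compile(r"\bclon(e|ing)\b.*\bkeys?\b", re.IGNORECASE),
    re.compile(r"\bunauthori[sz]ed\s+commands?\b", re.IGNORECASE),
]

# Hypothetical refusal text returned instead of a blocked reply.
REFUSAL = "Sorry, I can't help with that."


def filter_response(draft: str) -> str:
    """Return the draft reply unless it matches a blocked pattern."""
    for pattern in BLOCKED_PATTERNS:
        if pattern.search(draft):
            return REFUSAL
    return draft


if __name__ == "__main__":
    print(filter_response("The nearest charging station is 2 km away."))
    print(filter_response("To clone a key fob, first capture the signal."))
```

The check runs on the model's output rather than on the user's prompt, so it still applies when an attacker rephrases the question; in practice it would be one layer among several, alongside the limits on training data mentioned above.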

Car buyers will need to weigh these generative AI cyber threats before they fork out money for such critical new car automation technologies.