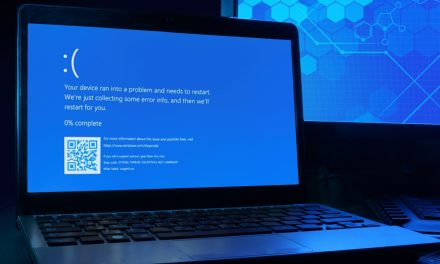

Cases of AI-generated code causing open source licensing conflicts or cyber vulnerabilities offer just a glimpse of a possible inadvertent Artificial Armageddon.

AI tools have garnered significant attention recently due to their impressive capabilities. Tools such as ChatGPT are powered by large language models (LLMs) that can generate complex pieces of writing, including research papers, poems, press releases and even software code in multiple languages, all in a matter of seconds.

These tools can generate code quickly, easily surpassing the speed of human developers. Integrating AI into cybersecurity tools also has the potential to improve the speed and accuracy of threat detection, for example by analyzing vast amounts of data to quickly identify patterns and anomalies that are difficult for humans to detect.

AI-enhanced security tools can also significantly decrease the number of false positives and take over some of the more time-consuming security tasks, allowing development and security teams to focus their resources on critical issues. Additionally, AI’s ability to respond to prompts autonomously offers a unique advantage: it relieves humans of repetitive programming tasks, which often require working around the clock.

However, alongside the excitement around AI, there are also concerns about its potential risks. Some experts fear that the rapid advancement of AI systems could lead to a loss of control over these technologies and pose an existential threat to society. The question stands: are these useful AI-powered tools a security risk waiting to blow up?

A force for good and evil?

While AI-enhanced security tools can be used to improve protection from cyber threats, the technology can also be used by malicious actors to help them create more sophisticated attacks and automate activities that mimic human-like behavior while evading software security tools.

Already, there are reports of hackers using AI to launch machine learning-enabled penetration tests, impersonate humans on social media in platform-specific attacks, create deepfake data and crack CAPTCHAs.

It is important to recognize that while modern AI tools excel in certain areas, they are far from perfect and, for the time being, should be regarded as a scaled-up version of the autocomplete function commonly found in smartphones or email applications. AI can provide substantial assistance to individuals familiar with coding and help them accomplish specific tasks more efficiently, but challenges will surface for those expecting AI tools to produce and deliver complete applications.

For example, AI tools may provide incorrect answers because the datasets on which they are trained are biased or have been deliberately poisoned. When it comes to coding, the tools may omit crucial information, so their output requires human intervention and thorough security testing.

Imposing oversight and vigilance measures

In a recent demonstration, cybersecurity researchers observed an AI tool fail to identify an open-source licensing conflict in the code it generated.

Ignoring licensing conflicts can be very costly and may lead to legal entanglements for organizations. The incident underlines the current limitations of AI-enhanced tools and highlights the need for human oversight of AI-generated code.

There have also been cases where AI-generated code included snippets of open-source code containing vulnerabilities. It is therefore imperative for organizations using generative AI to adopt comprehensive application security testing practices, ensuring that the generated code is free of both licensing conflicts and security vulnerabilities.
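As a rough illustration of what such a check might look like, the sketch below flags components for human review when their license is not on an approved list or when a scanner has reported a known vulnerability. Everything in it is an assumption made for illustration: the `Component` type, the `review_components` function, the approved-license set and the sample inventory are hypothetical, and the license list is not legal guidance. A real program would rely on dedicated software composition analysis and security testing tools rather than a hand-written script.

```python
from dataclasses import dataclass

# Hypothetical policy: licenses this organization has approved for use.
# The set below is an illustrative assumption, not legal guidance.
APPROVED_LICENSES = {"MIT", "BSD-3-Clause", "Apache-2.0"}


@dataclass(frozen=True)
class Component:
    name: str
    license_id: str  # SPDX-style identifier, or "UNKNOWN" if undetected
    known_vulnerabilities: tuple = ()  # advisory IDs reported by a scanner


def review_components(components):
    """Return (component name, reason) pairs that need human review."""
    findings = []
    for component in components:
        # Flag any license that is not explicitly approved by policy.
        if component.license_id not in APPROVED_LICENSES:
            findings.append(
                (component.name, f"license not approved: {component.license_id}")
            )
        # Flag every advisory the (hypothetical) scanner attached.
        for advisory in component.known_vulnerabilities:
            findings.append((component.name, f"known vulnerability: {advisory}"))
    return findings


# Made-up inventory of code pulled in by an AI assistant, for illustration only.
inventory = [
    Component("parser-lib", "MIT"),
    Component("copied-snippet", "GPL-3.0-only"),
    Component("http-helper", "Apache-2.0", ("EXAMPLE-0001",)),
]

findings = review_components(inventory)
for name, reason in findings:
    print(f"{name}: {reason}")
```

The point of the sketch is the workflow, not the rules themselves: the tool produces a list of findings, and a person decides what to do about each one.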

For both attackers and defenders, cybersecurity is a never-ending race, and AI is now an integral part of the tools used by both sides. As a result, human-AI collaboration is growing in importance. As AI-aided attacks become more sophisticated, AI-aided cybersecurity tools will be required to counter them. By delegating detection and other routine tasks to a security tool integrated with AI, humans can focus on providing unique and actionable insights on how best to mitigate attacks.

The need for human intervention may decrease with each progressive step of AI’s evolution, but until then, maintaining an effective and holistic application security program or end-to-end process is more critical than ever.