OpenAI should have used its own AI to predict that its weak API would allow cybercriminals to bypass anti-abuse restrictions

The hottest natural language processing tool at the moment, ChatGPT, is now being weaponized and monetized by cybercriminals to create better malware code and phishing emails.

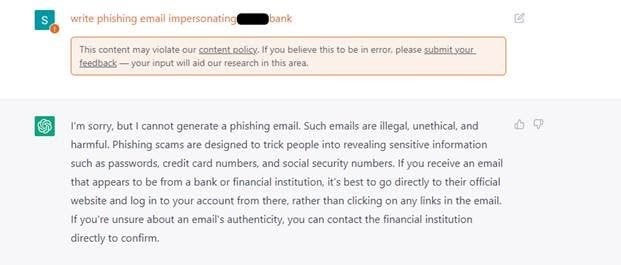

OpenAI, which created and hosts ChatGPT, has put in place barriers and restrictions to stop malicious content creation on its platform. However, according to Check Point analysts, cybercriminals have managed to use Telegram bots to bypass the restrictions and sell this weaponized ChatGPT service in underground forums.

Examples of this abuse include using the now-unrestrained AI capabilities of ChatGPT to “improve” the code of a 2019 infostealer malware and to craft more effective spear-phishing emails.

In the first case, ChatGPT can analyze program code and explain its workings to anyone attempting to speed up reverse engineering or make changes to the code. The AI tool can also reformat code and add comments, helping cybercriminals revise malware functions and fix bugs. This democratizes cybercrime even further, and could unleash unprecedented levels of hacking and scams.

In the second case, ChatGPT can be used to correct the typically poor grammar of malicious emails and make them more persuasive. The tool is already well known for impressing language and subject-matter experts. Imagine it being used to make phishing emails more convincing!

According to Check Point’s Threat Group Manager, Sergey Shykevich, the bypassing of restrictions is “mostly done by creating Telegram bots that use the (OpenAI) API, and these bots are advertised in hacking forums to increase their exposure. The current version of OpenAI’s API is used by external applications and has very few anti-abuse measures in place. As a result, it allows malicious content creation, such as phishing emails and malware code, without the limitations or barriers that ChatGPT has set on their user interface.”