AI adoption has reached an inflection point in enterprise infrastructure, and securing the AI ecosystem is now the new cybersecurity frontier for organizations.

Just as virtualization transformed compute and cloud-redefined networks, AI is now woven into the fabric of enterprise systems.

Yet this rapid integration has created a new class of vulnerabilities across AI infrastructure – spanning models, data pipelines, and orchestration environments. Each component, if unprotected, can become a potential entry point for compromise, demanding a fresh approach to resilience and governance.

Kyndryl’s 2025 Readiness Report underscores the urgency: only 31% of organizations globally say they are “completely ready” to manage external business risks. Even more concerning, 60% of leaders admit they struggle to keep pace with the velocity of technological change.

This readiness gap exposes enterprises to cascading risks — not only from traditional cyberthreats, but from AI-assisted attacks that can adapt, learn, and automate compromise at scale.

The AI-enabled threat landscape

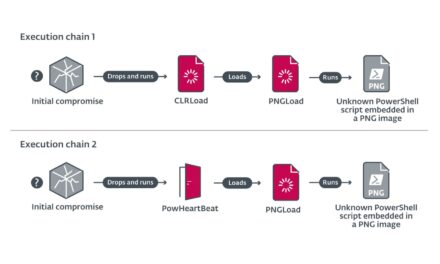

The security community has long accepted that automation benefits both sides of the battlefield. Threat actors are now integrating LLM-driven reconnaissance, adaptive phishing, and model-based intrusion techniques into their toolkits. Adversaries can generate polymorphic malware, automate vulnerability discovery, and even manipulate datasets to evade detection.

Across APAC, Kyndryl and industry partners have observed the emergence of AI-assisted “campaign frameworks” — reusable malicious models capable of generating spear-phishing content, modifying payloads in real time, and orchestrating multi-stage attacks without human intervention.

For defenders, the response must be equally algorithmic. Security operations centers (SOCs) are beginning to integrate AI-driven telemetry correlation, drift detection, and automated containment into their pipelines. However, securing AI itself — the models, training data, inference APIs, and orchestration systems — remains an unfilled gap in most organizations’ architectures.

From compliance to continuous assurance

Securing AI cannot rely on legacy compliance frameworks. Enterprises must embed secure-by-design principles throughout the AI lifecycle — Singapore’s Guidelines on Securing AI Systems (2024) from data acquisition to model decommissioning offer a globally relevant framework that technical leaders across APAC can adapt.

At a minimum, organizations should operationalize six lifecycle controls:

- Ownership and Risk Profiling: Assign accountable owners for every model, classify risk by data sensitivity and criticality.

- Data & Model Hygiene: Apply encryption, enforce separation between training and production, implement access controls at dataset and model layers.

- Adversarial Testing: Conduct “red team” simulations to detect model poisoning, prompt injection, or bias exploitation.

- Visibility and Bill of Materials: Maintain a model and software bill of materials (MBOM/SBOM) to trace every dependency and library version.

- Runtime Observability: Log inference requests and outputs, monitor anomalies, and flag deviations in model behavior.

- Incident Response and Retirement: Integrate AI systems into existing IR playbooks and securely decommission outdated models.

These practices shift AI security from a compliance checklist to an engineering discipline — one grounded in visibility, automation, and iterative assurance.

APAC’s distinct challenge: hybrid complexity

For APAC enterprises, securing AI is further complicated by hybrid architectures. Organizations in Singapore, Malaysia, Indonesia, and the Philippines commonly operate multi-cloud and edge deployments across jurisdictions with varying data sovereignty rules. According to Kyndryl’s Readiness Report, 44% of global leaders are reevaluating data-governance policies, 41% are considering data repatriation, and 65% of organizations have made changes to their cloud strategies in response to new geopolitical pressures.

Each of these shifts expands the AI threat surface: training data stored across jurisdictions, models deployed on the edge, and vendors integrated into supply chains. The result is a mesh of interdependent risks that require consistent governance frameworks and real-time telemetry correlation.

To close these gaps, enterprises must invest in end-to-end observability that connects cloud workloads, data pipelines, and AI endpoints for real-time correlation. Security and governance policies should be codified through Infrastructure as Code and ModelOps, ensuring consistency across hybrid environments. At the same time, zero-trust principles must extend to model APIs and inference layers, while AI-specific threat-intelligence sharing across industries and regions becomes essential to anticipate emerging exploits.

Cyber resilience as a board and engineering imperative

The CISO’s remit is expanding beyond data protection to include model integrity and algorithmic reliability. Modern incident-response plans must now account for scenarios like model drift, prompt leakage, or training-data compromise. The metrics of resilience are evolving, too — from MTTR (Mean Time to Recovery) for systems to MTTM (Mean Time to Mitigate) for AI incidents.

As Singapore’s recent breach of critical infrastructure revealed, cyber resilience must integrate governance, engineering, and operations. Technical teams should partner with compliance, HR, and finance to conduct joint readiness drills — because AI incidents will not respect departmental silos.

The road ahead: building secure AI infrastructures

AI will be embedded into every layer of enterprise infrastructure — from network optimization to predictive maintenance. For APAC organizations, the imperative is clear: secure the models before they secure the market.

In a hyper-connected region defined by diverse regulatory environments and fast-moving digital ecosystems, resilience depends on engineering discipline. The frontier of cybersecurity is no longer just the perimeter or supply chain — it is the model layer itself.

Enterprises that catalog, test, monitor, and govern their AI assets as rigorously as their codebases will be the ones that thrive. Because in the age of intelligent automation, the only sustainable advantage is secure intelligence.