As C-level executives try to understand AI technology and its risks, they must also make critical decisions about how and to what degree AI should be implemented.

Okta’s report, “AI at Work 2024: C-suite perspectives on artificial intelligence”, paints a picture of a new AI era dawning in the workplace.

Along with the opportunities for innovation and productivity, the new era of AI brings with it new security and privacy concerns.

As AI/ML moves from the backend of the tech stack to customer-facing GenAI applications, how should C-suite leaders in Asia Pacific organizations decide how much AI adoption is too much, identify the relevant use cases, and determine which risks need mitigating?

CybersecAsia discussed some key findings of the Okta report with Ben Goodman, Senior VP and General Manager, Asia Pacific and Japan, Okta.

What are the emerging security threats and data privacy concerns for APAC organizations?

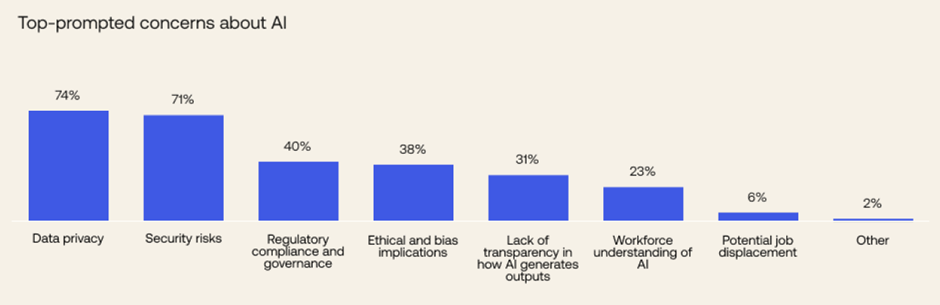

Ben Goodman (BG): AI is a frequent backdrop to my daily conversations with business leaders, customers and partners across Asia Pacific and Japan. The promise of AI to transform businesses and change how people work is a constant theme, as are concerns about an emerging generation of security threats and data privacy risks. Okta’s recent AI at Work 2024 survey of C-suite leaders globally identified data privacy (74%) and security risks (71%) as the top two concerns about AI adoption.

Just as legitimate businesses are using generative AI to scale quickly and boost productivity, so are bad actors. With generative AI, it’s easier than ever for cybercriminals to separate people and companies from their money and data. Low-cost, easy-to-use tools, combined with a proliferation of public-facing data (e.g. photos of individuals, voice recordings, and personal details shared on social media) and more computing power to process that data, make for an expanding threat landscape.

AI’s automation capabilities also mean that bad actors can more easily scale operations such as phishing campaigns, which until recently were tedious, manual, and expensive undertakings. As the volume of attacks increases, so does the likelihood that one of them succeeds, and the proceeds are then rolled into more sophisticated cybercrimes.

Someone with no coding, design, or writing experience can level up in seconds as long as they know how to prompt: that is, feed natural language instructions into a large language model, or LLM (think ChatGPT), or into a text-to-image model to generate entirely new content.

How is AI impacting security, innovation, and operational efficiency for organizations in APAC?

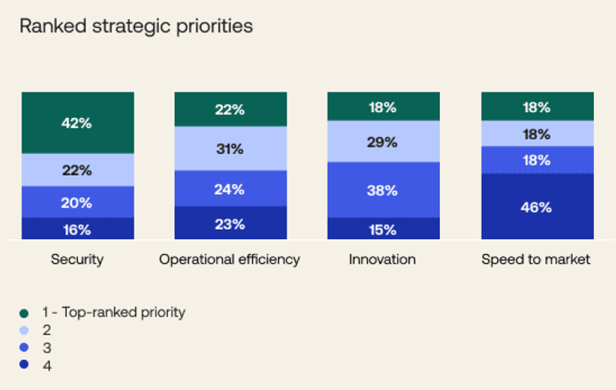

BG: In the AI at Work 2024 survey, we asked executives to rank their strategic priorities for AI, from security and operational efficiency to innovation and speed to market.

Across the board, respondents coalesced around security. Some 42% of all executives surveyed named security as their top strategic priority, followed by operational efficiency at 22%. Broken down by role, however, the survey shows that different executive groups favor different priorities: security for CSOs/CISOs, speed to market for CTOs, and operational efficiency for CIOs.

Leaders at larger companies tend to rank security as their most important strategic priority. That priority shifts slightly for smaller companies: Executives at businesses with fewer than 500 employees distribute their priorities more evenly among operational efficiency, innovation, and speed to market, with security being less of a focus.

Respondents in the technology industry tend to rank security as their most important strategic priority, followed by those in finance and banking, and then those in professional services.

Security is the top priority for executives in Europe, the Middle East and Africa (EMEA) and the Americas (AMER), but Asia Pacific (APAC) leaders ranked innovation first. This focus on innovation is admirable, but as business leaders, we need to stay conscious of AI’s massive impact on security and data privacy. If we don’t, we put our innovation agenda at risk.

How do executives fare on their levels of AI understanding and expertise?