The world now knows how much cybercriminals love the chatbot’s power to create chaos, but that power is actually a double-edged sword …

Having witnessed the wonders of the generative AI chatbot ChatGPT (first built on GPT-3.5 and now on GPT-4), and the ways cybercriminals have been probing the system’s flaws to abuse its power, the public should also know the good news: the bot can be used by the good guys to boost cybersecurity, too.

Sophos X-Ops experts have demonstrated three ways to use GPT-3 large language models to:

- simplify the search for malicious activity in datasets from security software

- filter spam text more intelligently

- speed up analysis of “living off the land” binary (LOLBin) attacks

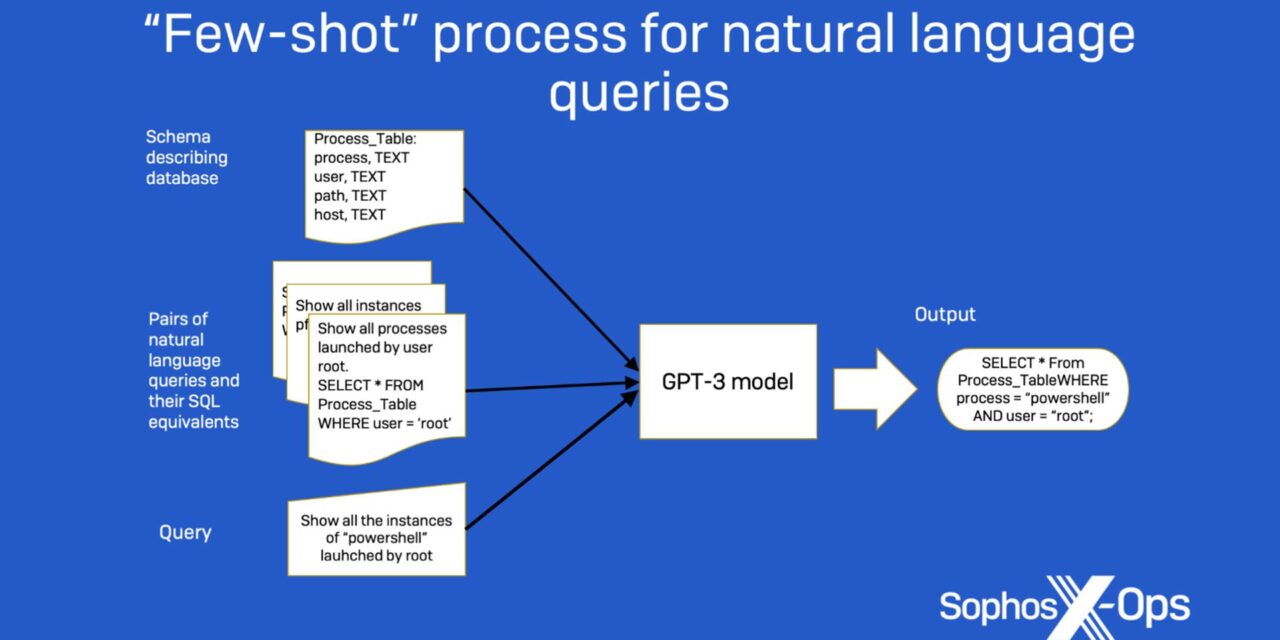

All three prototype use-cases deploy a technique called “few-shot learning” to train the generative AI model with just a few data samples, reducing the need to collect a large volume of pre-classified data.
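To make the idea concrete, here is a minimal sketch of how a few-shot prompt can be assembled: a task description, a handful of labeled examples, and an unlabeled input for the model to complete. The function name, the prompt layout, and the sample messages are assumptions made for illustration; they are not taken from the Sophos research.

```python
from typing import List, Tuple


def build_few_shot_prompt(task: str, examples: List[Tuple[str, str]], query: str) -> str:
    """Assemble a few-shot prompt: task text, labeled examples, then the unlabeled query."""
    lines = [task, ""]
    for text, label in examples:
        lines.append(f"Input: {text}")
        lines.append(f"Label: {label}")
        lines.append("")
    # The last block has no label, so the model is asked to supply it.
    lines.append(f"Input: {query}")
    lines.append("Label:")
    return "\n".join(lines)


# Hypothetical labeled samples, invented for this sketch.
EXAMPLES = [
    ("You won a free cruise! Reply with your card number.", "spam"),
    ("Can we move tomorrow's standup to 10am?", "ham"),
    ("URGENT: verify your account at this link now", "spam"),
]

prompt = build_few_shot_prompt(
    "Classify each message as spam or ham.",
    EXAMPLES,
    "Lowest prices on meds, click here",
)
print(prompt)
```

The resulting text would be sent to the model as-is; only three labeled samples are needed here, which is the point of the approach.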

Three use cases in detail

In the first use case, searching for malicious activity in datasets from security software, the experts built a natural language query interface on top of GPT-3 for sifting through security software telemetry, then tested it against Sophos’s endpoint detection and response product.

With this interface, defenders were able to filter the telemetry with basic English commands, without needing to understand SQL or the database’s underlying structure. At scale, using GPT-3 to sift through security software telemetry for malicious activity could be much faster and more intuitive than manual labor.
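A rough sketch of such an interface, under stated assumptions, might look like this. The table schema, the example question/SQL pairs, and the `run_read_only` guard are all hypothetical, and the model call is replaced by a hand-written stand-in string; none of this reflects the actual Sophos implementation.

```python
import sqlite3

# Hypothetical telemetry schema, invented for this sketch.
SCHEMA = "CREATE TABLE process_events (host TEXT, process_name TEXT, cmdline TEXT, ts INTEGER)"

# Hypothetical question/SQL pairs used as few-shot examples.
EXAMPLES = [
    ("Show all processes run on host web01",
     "SELECT * FROM process_events WHERE host = 'web01'"),
    ("How many events mention powershell?",
     "SELECT COUNT(*) FROM process_events WHERE cmdline LIKE '%powershell%'"),
]


def build_sql_prompt(question: str) -> str:
    """Give the model the schema and a few examples, then the new question."""
    parts = ["Translate the question into a SQLite query.", f"Schema: {SCHEMA}", ""]
    for q, sql in EXAMPLES:
        parts += [f"Question: {q}", f"SQL: {sql}", ""]
    parts += [f"Question: {question}", "SQL:"]
    return "\n".join(parts)


def run_read_only(conn: sqlite3.Connection, sql: str) -> list:
    """Run model-produced SQL only if it is a single SELECT statement."""
    statement = sql.strip().rstrip(";")
    # Conservative check: any remaining semicolon is rejected, even inside a string.
    if not statement.lower().startswith("select") or ";" in statement:
        raise ValueError("only a single SELECT statement is allowed")
    return conn.execute(statement).fetchall()


conn = sqlite3.connect(":memory:")
conn.execute(SCHEMA)
conn.executemany(
    "INSERT INTO process_events VALUES (?, ?, ?, ?)",
    [
        ("web01", "powershell.exe", "powershell -enc SQBFAFgA", 1),
        ("web02", "notepad.exe", "notepad report.txt", 2),
    ],
)

prompt = build_sql_prompt("Which hosts ran powershell.exe?")
# Stand-in for the model's reply; a real system would send `prompt` to the model.
model_sql = "SELECT host FROM process_events WHERE process_name = 'powershell.exe'"
rows = run_read_only(conn, model_sql)
print(rows)
```

Including the schema in the prompt is what spares the analyst from knowing the database layout. The read-only check is one possible safeguard against running unexpected statements from generated SQL.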


In the case of using a generative AI model to filter spam text, Sophos experts found that, when compared to other machine learning models for spam filtering, a spam filter using GPT-3 was significantly more accurate.

While different types of machine learning models have been used for spam text detection in the past, the researchers found that GPT-3 significantly outperformed traditional machine learning approaches, especially when the amount of training data was small.

At scale, all other things being equal (that is, if spammers do not adapt quickly and effectively to GPT-3 spam filters), this could be a big disruptor to the spam industry.


Finally, in the case of speeding up analysis of LOLBin attacks, the researchers were able to create a program to simplify the process for reverse-engineering the command lines of LOLBin attacks.

Such reverse-engineering is critical for understanding how LOLBin attacks work but notoriously difficult, because the command lines are often obfuscated, lengthy and hard to parse.

However, ChatGPT, in its GPT-3.5-based form, is already well-versed in code in many forms. GPT-3 can write working code in multiple scripting and programming languages when given a natural language description of the desired functionality. But it can also be trained to do the opposite: generate analytical descriptions from command lines or chunks of code.

So once again, researchers used the few-shot approach: with each command line string submitted for analysis, GPT-3 was given a set of 24 common LOLBin-style command lines with tags identifying their general category and a reference description. Using the sample data, GPT-3 was configured to provide multiple potential descriptions of command lines. Next, the descriptions were translated into command line entries. Finally, the resulting command lines were compared to the original: the descriptions for the ones closest to the original were deemed most accurate.
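The final comparison step can be sketched as follows. The article does not say how closeness to the original was measured, so the character-level similarity from Python’s `difflib` used here is an assumption. The candidate descriptions and regenerated command lines are hand-written placeholders standing in for model output.

```python
from difflib import SequenceMatcher
from typing import List, Tuple


def similarity(a: str, b: str) -> float:
    """Character-level similarity in [0, 1]; an assumed metric, not from the source."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def rank_descriptions(original: str,
                      candidates: List[Tuple[str, str]]) -> List[Tuple[float, str]]:
    """Each candidate is (description, command line regenerated from that description)."""
    scored = [
        (similarity(original, regenerated), description)
        for description, regenerated in candidates
    ]
    # Highest similarity first: its description is treated as the most accurate.
    return sorted(scored, key=lambda pair: pair[0], reverse=True)


original = "certutil.exe -urlcache -split -f http://example.com/a.exe a.exe"

# Hypothetical model outputs, written by hand for illustration.
candidates = [
    ("Downloads a file from a URL using certutil and saves it locally.",
     "certutil -urlcache -split -f http://example.com/a.exe a.exe"),
    ("Decodes a Base64-encoded file with certutil.",
     "certutil -decode in.txt out.bin"),
]

ranked = rank_descriptions(original, candidates)
best_score, best_description = ranked[0]
print(best_description)
```

The round trip works as a self-check: a description that regenerates something close to the original command line has probably captured what that command does.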

With GPT-3, reverse engineering could be accelerated and improved, giving defenders a greater ability to stop such attacks in the future (again, assuming all other things are equal).

According to the firm’s principal threat researcher, Sean Gallagher: “One of the growing concerns within security operation centers is the sheer amount of ‘noise’ coming in. There are just too many notifications and detections to sort through, and many companies are dealing with limited resources. With something like GPT-3, we can simplify certain labor-intensive processes and give back valuable time to defenders.”

The firm will make the results of its research efforts available on GitHub for those interested in testing GPT-3 in their own analysis environments.